Why Districts Fail to Track Individual Technology Program Results—and How They Can Start

Federal Programs departments, the custodians of Title I funding, play a crucial role in determining the allocation of resources to improve math and reading proficiency in K-12 schools. Yet, a glaring gap exists: most districts fail to consistently track the specific impact of individual educational technology programs, such as LGL Edge, iReady, or IXL, on student outcomes. Why is this the case, and how can districts begin to address this challenge?

The Problem: Why Tracking Falls Short

The lack of tracking isn’t due to a simple reluctance to confront results. While it’s true that some educators might fear the scrutiny of data, the underlying issues are more nuanced:

Small Sample Sizes at School Levels

Tracking results for specific programs often involves small subsets of students, particularly in individual schools. Such small samples make it statistically challenging to draw meaningful conclusions. For instance, if only a small group of students uses a given program, their performance on state tests may not provide sufficient data to prove or disprove its efficacy.
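To make the sample-size problem concrete, here is a minimal sketch of how the uncertainty around an observed proficiency rate shrinks as the group grows. The function name `margin_of_error` and the group sizes are illustrative assumptions, not part of any district's reporting system, and the normal approximation used here is itself only rough for very small groups.

```python
import math


def margin_of_error(proficiency_rate: float, n_students: int, z: float = 1.96) -> float:
    """Approximate 95% margin of error for an observed proficiency rate.

    Uses the normal approximation to the binomial, which is a simplification
    and becomes unreliable for very small groups.
    """
    if n_students <= 0:
        raise ValueError("n_students must be positive")
    return z * math.sqrt(proficiency_rate * (1 - proficiency_rate) / n_students)


# Hypothetical group sizes: one classroom, one grade at a school, a district cohort.
for n in (15, 60, 400):
    print(f"n={n:>3}: 50% proficient, +/- {margin_of_error(0.5, n):.1%}")
```

Under these assumptions, a 15-student group carries a margin of roughly 25 percentage points, while a 400-student cohort carries about 5. That is why school-level results for a single program are so hard to interpret on their own.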

Limitations of Grade-Level Assessments

State tests are designed to measure grade-level proficiency, not to measure growth evenly across all ability levels. A student significantly behind grade level might show measurable improvement when using a program, but their state test scores might not reflect this progress if they remain below grade level.

Resource Constraints

Many district testing departments have experienced budget cuts over the years, resulting in fewer staff members capable of managing the complex data analysis required to assess program efficacy accurately.

Why Traditional Metrics Fall Short

The limitations of widely used assessments exacerbate the problem. State summative tests, which report scaled scores (e.g., 725 or 750), are not designed to capture detailed growth in students who are far below or above grade level. The discrepancy becomes visible when grade-level state test results are compared with those of a vertically aligned diagnostic assessment such as ADAM.

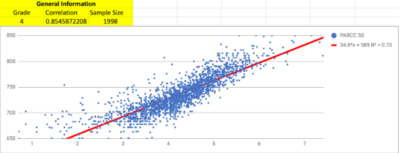

Take, for example, the graph above, which compares PARCC math test data with ADAM diagnostic results. While PARCC measures grade-level proficiency, ADAM assesses instructional levels (shown on the x-axis as grade-level scores), often spanning several grade levels above or below the student’s current grade. As a result, students at the lower end of the proficiency spectrum on state tests might show scattered, non-informative data points. This scattering is particularly evident in the 600 to 700 scaled score range, where PARCC fails to capture the nuanced growth of students who are “far below” in ability.

The Solution: Embracing Precision Tools

To overcome these challenges, districts can take actionable steps:

Adopt Dual-Purpose Assessments

Precision diagnostic tools like ADAM and DORA offer vertically aligned scoring, allowing educators to track progress regardless of a student’s grade level. Unlike state tests, these tools measure growth across the full spectrum of a student’s abilities, from far below to above grade level. This capability makes it far less likely that real progress goes unnoticed.

Leverage Technology for Analysis

Statistical analysis, once the domain of specialized experts, can now be conducted using advanced data tools integrated into educational platforms. This reduces the burden on understaffed testing departments.

Set Clear Pre- and Post-Testing Protocols

Federal Program directors should require schools to implement pre- and post-assessments for all technology programs. This ensures data is collected consistently, providing a foundation for meaningful analysis.
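Once pre- and post-assessment scores are collected consistently, the basic growth summary is simple to compute. The sketch below assumes scores on a vertically aligned scale (for example, grade-level equivalents) and matched student records. The function name `summarize_gains` and the sample scores are hypothetical. Note that a pre/post gain describes growth but does not by itself show that the program caused it.

```python
from statistics import mean, stdev


def summarize_gains(pre_scores, post_scores):
    """Summarize paired pre/post growth for one program.

    Assumes both lists are in the same student order and use the same
    vertically aligned scale. Returns the number of students, the mean gain,
    the standard deviation of gains, and a standardized effect size
    (mean gain divided by the standard deviation of gains).
    """
    if len(pre_scores) != len(post_scores):
        raise ValueError("pre and post lists must be the same length")
    if len(pre_scores) < 2:
        raise ValueError("need at least two students to estimate spread")

    gains = [post - pre for pre, post in zip(pre_scores, post_scores)]
    mean_gain = mean(gains)
    sd_gain = stdev(gains)
    # Effect size is undefined when every student gained exactly the same amount.
    effect_size = mean_gain / sd_gain if sd_gain > 0 else None
    return {"n": len(gains), "mean_gain": mean_gain,
            "sd_gain": sd_gain, "effect_size": effect_size}


# Hypothetical grade-level-equivalent scores for five students.
pre = [2.1, 3.4, 2.8, 4.0, 3.1]
post = [2.9, 3.9, 3.8, 4.4, 3.6]
print(summarize_gains(pre, post))
```

Reporting the number of students alongside the mean gain keeps the small-sample caveat visible whenever results are compared across schools or programs.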

Next Steps for Federal Program Directors

Federal Program directors can lead the way by:

- Identifying Robust Assessment Tools: Look for tools that provide both diagnostic and summative insights. Computer-adaptive tests are not necessarily diagnostic—scrutinize tools like ADAM and DORA to ensure they align with your district’s needs.

- Mandating Data Collection: Require schools to report pre- and post-test results for all funded programs, enabling accountability and evidence-based decision-making.

- Training Site Administrators to Be Instructional Leaders: Provide professional development on using Excel or Google Sheets to measure growth and interpreting diagnostic data to guide instruction effectively.

By prioritizing precise, growth-oriented tools and standardized data protocols, districts can transform how they measure the impact of educational technology, ensuring that every dollar invested in student achievement delivers meaningful returns.
